In the fast-moving world of data engineering, delivering reliable, tested, high-quality data pipelines is essential. Manual deployments, inconsistent environments and a lack of proper version control quickly lead to errors, delays and wasted resources. This is where Continuous Integration (CI) and Continuous Delivery (CD) come in, changing how data engineers manage and deploy Databricks Workflows.

Combining Databricks Asset Bundles (DAB) templates with leading CI/CD platforms such as Azure DevOps Pipelines or GitHub Actions provides a powerful framework for automating the entire lifecycle of data pipelines. It lets teams move from scattered, error-prone manual processes to a unified, repeatable and scalable deployment strategy.

Why CI/CD is a "game-changer" for data pipelines

Data pipeline development has traditionally lagged behind software development in adopting mature CI/CD practices. But as data architectures grow more complex and data becomes central to business operations, the need for automation and reliability keeps growing.

Implementing CI/CD for Databricks Workflows brings several benefits:

- Consistency and repeatability: No more "works on my machine". CI/CD pipelines ensure that every deployment goes through the same automated set of steps, reducing human error and keeping configurations consistent across dev/test/prod environments.

- Faster, safer deployments: Automated testing and deployment allow more frequent releases with greater confidence. Problems are caught early, which reduces the risk of production failures.

- Version control and auditability: Every change to pipeline code and configuration is tracked in Git. This provides a full audit trail, makes reverts easy and supports compliance.

- Better collaboration: Standardized development and deployment processes improve collaboration between data engineers, data scientists and analysts.

- Less manual work: Data engineers are freed from repetitive tasks so they can focus on features and complex data challenges.

- Automated testing: Running unit, integration and data quality tests directly in CI helps ensure that changes do not break existing functionality or degrade data quality.

The role of Databricks Asset Bundles (DAB) templates

Databricks Asset Bundles (DAB) are a major step forward in managing Databricks resources. At their core, DABs are a declarative way to define Databricks workspace resources, such as Databricks Workflows (Jobs), notebooks, Delta Live Tables (DLT) pipelines and MLflow experiments, together with settings such as permissions and the identity (for example a service principal) a job runs as, all in YAML configuration files.

DAB templates go a step further by providing reusable, parameterizable "blueprints" for these resources. Instead of creating each job or notebook by hand, you define a template that captures common patterns and configurations.

Key advantages of DAB templates in CI/CD:

- Standardization: Enforce consistent naming conventions, cluster configurations and job settings across projects, which is essential for maintainability and scalability.

- Parameterization: Define variables in templates (e.g. environment name, cluster sizes, storage paths). The CI/CD pipeline supplies the values for each environment (dev/test/prod). For example, a single DAB template for an ingestion job can use raw_dev_path in dev and raw_prod_path in production.

- Modularity and reusability: Break complex pipelines into smaller bundles that are easier to maintain. Teams can share and reuse job definitions.

- Version-control friendly: DAB definitions are plain text (YAML), which makes them a natural fit for Git.

- "Infrastructure as code" for Databricks: Treat Databricks jobs and resources as code, which enables automated deployments and state management.
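To make the parameterization idea concrete, here is a minimal Python sketch of how one job definition ends up with raw_dev_path in dev and raw_prod_path in production. It is a simplified model, not the Databricks CLI's own resolver: the dict mirrors a bundle with a variable default plus per-target overrides, and the names `ingest_raw`, `raw_path`, `render_job` and friends are hypothetical.

```python
import re
from typing import Dict

# Hypothetical, simplified model of a bundle: variable defaults plus per-target
# overrides. The Databricks CLI resolves ${var.<name>} itself; this only mimics it.
BUNDLE = {
    "variables": {"raw_path": {"default": "raw_dev_path"}},
    "targets": {
        "dev": {"variables": {}},
        "prod": {"variables": {"raw_path": "raw_prod_path"}},
    },
    "job": {"name": "ingest_raw", "source_path": "${var.raw_path}"},
}

# Matches references such as ${var.raw_path} and captures the variable name.
VAR_REF = re.compile(r"\$\{var\.([A-Za-z_][A-Za-z0-9_]*)\}")


def variables_for(bundle: dict, target: str) -> Dict[str, str]:
    """Start from the declared defaults, then apply the target's overrides."""
    values = {name: spec["default"] for name, spec in bundle["variables"].items()}
    values.update(bundle["targets"][target]["variables"])
    return values


def resolve(text: str, variables: Dict[str, str]) -> str:
    """Replace every ${var.<name>} in text; unknown names raise KeyError."""
    def lookup(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"undefined variable: {name}")
        return variables[name]

    return VAR_REF.sub(lookup, text)


def render_job(bundle: dict, target: str) -> Dict[str, str]:
    """Return the job definition with all variable references resolved for target."""
    variables = variables_for(bundle, target)
    return {key: resolve(value, variables) for key, value in bundle["job"].items()}


if __name__ == "__main__":
    for target in ("dev", "prod"):
        print(target, render_job(BUNDLE, target))
```

Both targets share one job definition and only the variable values differ; an undefined ${var.name} reference fails loudly instead of quietly deploying a broken path.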

Building the CI/CD pipeline: Azure DevOps or GitHub Actions

Azure DevOps Pipelines and GitHub Actions are both mature CI/CD platforms with very good Databricks integration. Their syntax and terminology differ, but the core structure of the pipeline is similar.

Common pipeline stages

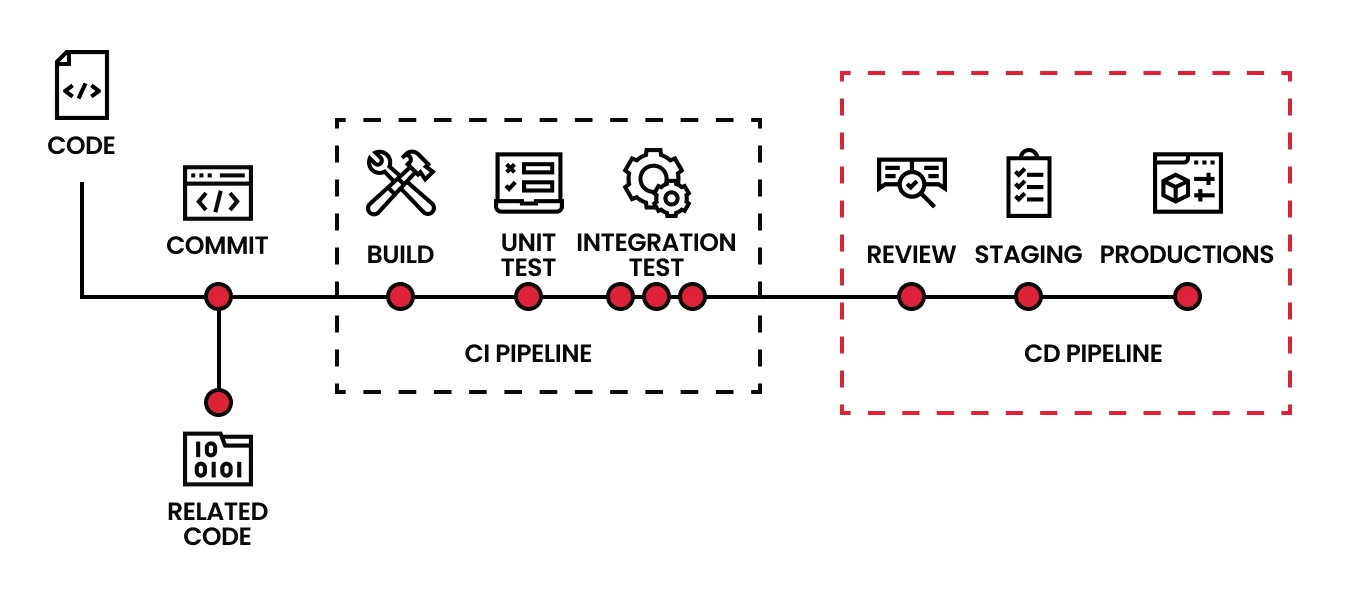

- Trigger:

- CI trigger: Starts automatically on every commit to selected branches (e.g. feature/*, main, develop).

- CD trigger: Often manual, or after a successful CI build on the release branch (e.g. main).

- Code checkout:

- The CI/CD agent checks out the repository containing the Databricks notebooks, DAB configuration files (YAML), helper scripts and tests.

- Dependency installation and build/package (CI):

- Install Python libraries, Spark connectors and so on.

- Validate the DAB configuration for syntax and settings (for example with databricks bundle validate). In larger projects, also build a wheel/JAR.

- Run tests (CI):

- Unit tests: Python functions or Spark logic from notebooks (run locally or on a small Databricks cluster).

- Integration tests: Temporarily deploy the job to a Databricks dev workspace and test it against external systems (e.g. reading from and writing to a test data lake).

- Data quality tests: Frameworks such as Great Expectations or Deequ, run on test datasets.

- Benefit: Regressions and data inconsistencies are caught early.

- Build artifact (CI):

- Package the tested code, DAB configuration files and required libraries into an artifact that is passed on to the CD stage.

- Deploy to environment (CD):

- This is where the DAB commands matter most. Using the Databricks CLI (which supports DAB), the pipeline authenticates to the target workspace (Dev/QA/Prod).

- It then runs, for example, databricks bundle deploy --target <target>, passing environment-specific parameters. The command reads the DAB YAML files and creates or updates Workflows, notebooks and other resources.

- Authentication: Handle it securely with Service Principals and Azure Key Vault (for secrets). The pipeline retrieves access tokens or service principal credentials from Key Vault.

- Benefit: Automated, consistent deployments across environments.

- Post-deployment verification / smoke tests (CD):

- Short sanity checks that the jobs start and can access their resources; this often means triggering a small test run.
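The trigger rules from the first stage are normally declared in the platform's own YAML (on: push: branches: in GitHub Actions, trigger: branches: include: in Azure DevOps). The Python sketch below only spells out that decision logic so it can be reasoned about and tested; the branch patterns are the examples from the text, and the function names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Example branch patterns taken from the text; adjust to your branching model.
CI_BRANCH_PATTERNS = ("feature/*", "develop", "main")
CD_BRANCH_PATTERNS = ("main",)  # release branch(es)


def _matches(branch: str, patterns: Iterable[str]) -> bool:
    # fnmatchcase is case-sensitive on every OS (plain fnmatch is not on Windows).
    return any(fnmatchcase(branch, pattern) for pattern in patterns)


def should_run_ci(branch: str) -> bool:
    """CI starts automatically on every commit to a matching branch."""
    return _matches(branch, CI_BRANCH_PATTERNS)


def should_run_cd(branch: str, ci_succeeded: bool, manually_triggered: bool) -> bool:
    """CD runs on a release branch, either on demand or after a green CI build."""
    return _matches(branch, CD_BRANCH_PATTERNS) and (manually_triggered or ci_succeeded)


if __name__ == "__main__":
    for branch in ("feature/new-ingest", "develop", "main", "hotfix/typo"):
        print(branch, should_run_ci(branch),
              should_run_cd(branch, ci_succeeded=True, manually_triggered=False))
```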
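For the test stage, notebook logic is easiest to unit-test when it is factored into plain functions. The example below is hypothetical: `normalize_orders` stands in for a transformation, and `check_quality` is a deliberately tiny stand-in for the kind of expectations (uniqueness, format) that Great Expectations or Deequ provide. The `test_` functions follow pytest conventions and run on the CI agent without a cluster.

```python
from typing import Dict, List, Optional


# Hypothetical transformation, kept free of Spark so the CI agent can unit-test it.
def normalize_orders(rows: List[Dict[str, Optional[str]]]) -> List[Dict[str, str]]:
    """Trim order IDs, lower-case country codes, drop rows without an order_id."""
    result = []
    for row in rows:
        order_id = (row.get("order_id") or "").strip()
        if not order_id:
            continue  # rows without a key cannot be loaded downstream
        country = (row.get("country") or "").strip().lower()
        result.append({"order_id": order_id, "country": country})
    return result


def check_quality(rows: List[Dict[str, str]]) -> List[str]:
    """Tiny stand-in for Great Expectations/Deequ-style expectations."""
    failures = []
    ids = [row["order_id"] for row in rows]
    if len(ids) != len(set(ids)):
        failures.append("order_id is not unique")
    if any(len(row["country"]) != 2 for row in rows):
        failures.append("country is not a 2-letter code")
    return failures


# pytest collects these test_ functions; they also work as plain calls.
def test_normalize_orders_drops_rows_without_id() -> None:
    rows = [{"order_id": " A1 ", "country": "PL "}, {"order_id": None, "country": "DE"}]
    assert normalize_orders(rows) == [{"order_id": "A1", "country": "pl"}]


def test_check_quality_flags_duplicate_ids() -> None:
    rows = [{"order_id": "A1", "country": "pl"}, {"order_id": "A1", "country": "pl"}]
    assert check_quality(rows) == ["order_id is not unique"]
```

In CI, a non-empty list from `check_quality` would be turned into a failing build, which is what stops a regression before deployment.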
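The validate, deploy and smoke-test steps can be driven from a small Python script on the CI agent. This sketch calls the Databricks CLI's databricks bundle validate, deploy and run commands in that order and stops at the first failure. It assumes the CLI is installed and already authenticated through environment variables, that the hypothetical `smoke_job_key` is the key of a small job defined under resources in the bundle, and that bundle run exits non-zero when the run fails. The `runner` parameter exists only so the sequence can be tested without a workspace.

```python
import subprocess
from typing import Callable, List, Sequence

# A runner takes an argument list and returns the process exit code.
Runner = Callable[[Sequence[str]], int]


def run_cli(args: Sequence[str]) -> int:
    """Run a command, streaming its output to the CI log, and return the exit code."""
    return subprocess.run(list(args), check=False).returncode


def bundle_steps(target: str, smoke_job_key: str) -> List[List[str]]:
    """Validate, deploy, then run one small job as a smoke test."""
    return [
        ["databricks", "bundle", "validate", "--target", target],
        ["databricks", "bundle", "deploy", "--target", target],
        ["databricks", "bundle", "run", "--target", target, smoke_job_key],
    ]


def deploy_and_smoke_test(target: str, smoke_job_key: str, runner: Runner = run_cli) -> None:
    for step in bundle_steps(target, smoke_job_key):
        code = runner(step)
        if code != 0:
            # Stop at the first failing step so later steps never run on a bad state.
            raise RuntimeError(f"exit code {code}: {' '.join(step)}")
```

A CD job would then call, for example, deploy_and_smoke_test("dev", "smoke_test_job"), where smoke_test_job is again a hypothetical job key.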

Implementation in practice: key considerations for data engineers

- Repository structure:

- src/: Databricks notebooks (.py, .ipynb, .sql)

- databricks.yml: the main DAB configuration file (the CLI also accepts bundle.yml)

- resources/: subdirectories with job definitions (jobs/, dlt_pipelines/), optionally split across several DAB files for modularity

- tests/: unit and integration test scripts

- .github/workflows/ (GitHub Actions) or azure-pipelines.yml (Azure DevOps): pipeline definitions

- Parameterization strategy:

- Declare variables in the main bundle configuration file and reference them as ${var.env} or ${var.cluster_size}.

- In the CI/CD pipeline, pass values to databricks bundle deploy with --var env=dev or --var cluster_size=small. This lets you deploy the same bundle to different environments with different configurations.

- Authentication and secrets:

- Do not hardcode credentials. Use Service Principals (for Azure DevOps/GitHub Actions) with the appropriate roles in Databricks and in Azure resources.

- Store the service principal secrets in Azure Key Vault.

- The pipeline should retrieve secrets from Key Vault securely and pass them to the Databricks CLI. Both Azure DevOps and GitHub Actions can integrate with Key Vault.

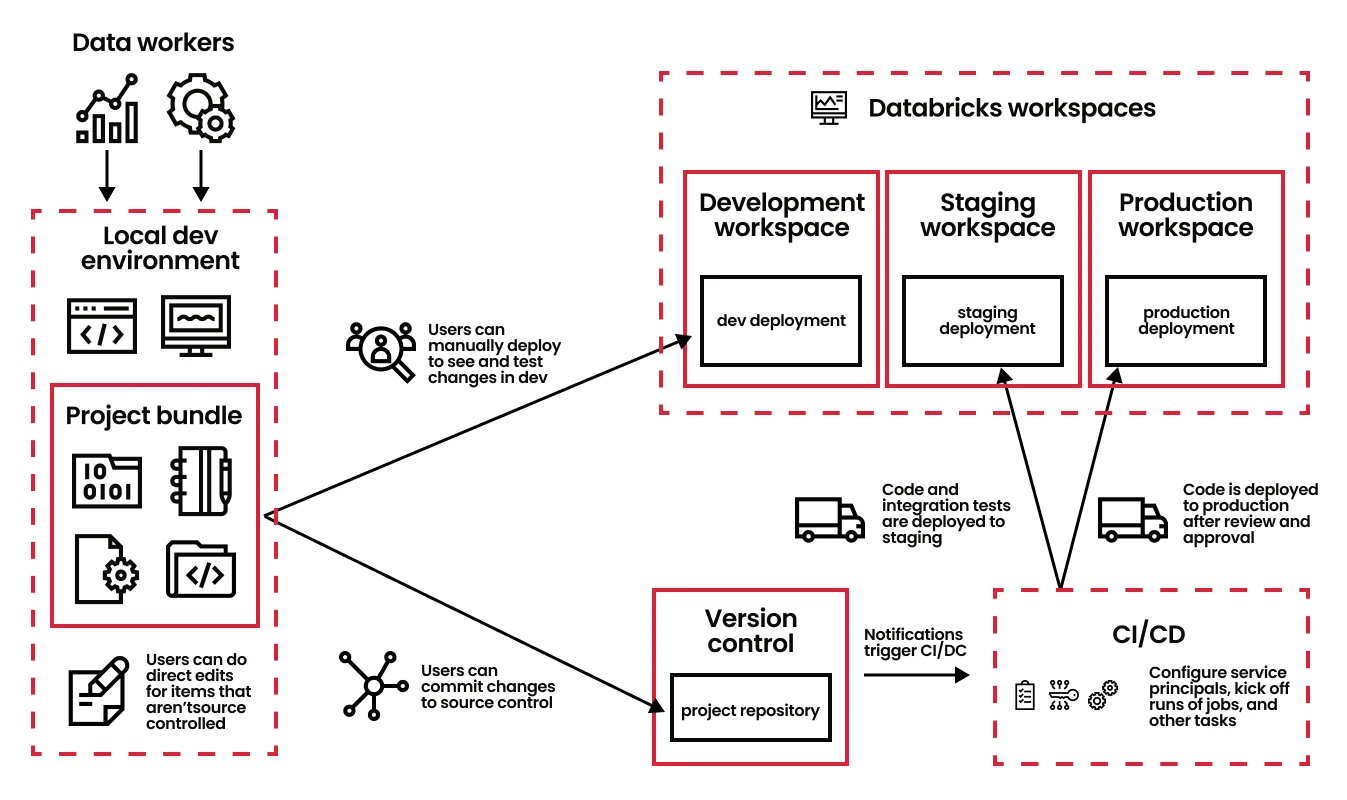

- Environment strategy:

- Separate Databricks workspaces for Development, QA/Staging and Production.

- CI deploys to dev for testing.

- CD deploys to QA after CI succeeds, then to Production once QA passes and any required manual approvals are given.

- Testing in Databricks:

- Unit tests: run pytest on the CI/CD agent.

- Integration/data quality tests: provision a temporary Databricks cluster in the pipeline, run the test notebooks/scripts on it, and terminate it after the tests. This way the tests run in the same kind of environment as the deployed jobs.

- Delta Live Tables (DLT) integration:

- DAB fully supports DLT pipeline definitions. CI/CD can deploy DLT pipelines as easily as regular Jobs, giving ETL the same governance and deployment process.
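Following the parameterization strategy, the per-environment values can live in one place and be turned into --var arguments for databricks bundle deploy. In this sketch the `ENV_VALUES` table and its values are hypothetical, and the variables are assumed to be declared in the bundle configuration. Building an argument list for subprocess, rather than a shell string, avoids quoting problems.

```python
from typing import Dict, List, Optional

# Hypothetical per-environment values; the variable names follow the text.
ENV_VALUES: Dict[str, Dict[str, str]] = {
    "dev": {"env": "dev", "cluster_size": "small"},
    "qa": {"env": "qa", "cluster_size": "small"},
    "prod": {"env": "prod", "cluster_size": "large"},
}


def deploy_command(target: str, overrides: Optional[Dict[str, str]] = None) -> List[str]:
    """Build the argument list for `databricks bundle deploy` with --var flags."""
    if target not in ENV_VALUES:
        raise ValueError(f"unknown target: {target}")
    values = {**ENV_VALUES[target], **(overrides or {})}
    command = ["databricks", "bundle", "deploy", "--target", target]
    for name in sorted(values):  # sorted for stable, diff-friendly logs
        command += ["--var", f"{name}={values[name]}"]
    return command


if __name__ == "__main__":
    print(deploy_command("dev"))
```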
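For secrets, a common pattern is for the pipeline to map Key Vault secrets to environment variables and for the deployment script to read them from there, failing fast when one is missing and never printing the values. The names below are the environment variables the Databricks CLI's unified authentication reads for OAuth machine-to-machine (service principal) credentials; whether your setup uses these or the Azure-specific ARM_* variables is an assumption to check against your configuration. The helper functions are hypothetical.

```python
import os
from typing import Dict, Mapping, Optional

# Variables used by the Databricks CLI's unified authentication for OAuth
# machine-to-machine (service principal) credentials.
REQUIRED_VARS = ("DATABRICKS_HOST", "DATABRICKS_CLIENT_ID", "DATABRICKS_CLIENT_SECRET")
SECRET_VARS = {"DATABRICKS_CLIENT_SECRET"}


def load_credentials(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read credentials the pipeline injected from Key Vault; fail fast if any is missing."""
    env = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        # Report names only, never values.
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


def redacted(credentials: Mapping[str, str]) -> Dict[str, str]:
    """Return a copy that is safe to print in CI logs."""
    return {k: "***" if k in SECRET_VARS else v for k, v in credentials.items()}
```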
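The dev, QA, prod promotion path with a manual gate before production can be written down as a small policy function. This is a hypothetical sketch: in practice the gate is usually configured in the platform itself (approvals and checks on Azure DevOps environments, required reviewers on GitHub environments), and the stage names simply follow the text.

```python
from typing import Optional

# Promotion order and gate from the text; the policy function itself is hypothetical.
PROMOTION_ORDER = ("dev", "qa", "prod")
NEEDS_APPROVAL = {"prod"}


def next_target(current: str, checks_passed: bool, approved: bool = False) -> Optional[str]:
    """Return the environment to deploy to next, or None if promotion stops here."""
    if not checks_passed:
        return None  # a failed stage never promotes
    position = PROMOTION_ORDER.index(current)  # ValueError for unknown names
    if position == len(PROMOTION_ORDER) - 1:
        return None  # already in production
    candidate = PROMOTION_ORDER[position + 1]
    if candidate in NEEDS_APPROVAL and not approved:
        return None  # wait for a manual approval
    return candidate
```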
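The temporary-cluster pattern for integration tests is essentially a try/finally around the test run. This sketch expresses it as a context manager; `create` and `terminate` are hypothetical callables that, in a real pipeline, might wrap Databricks SDK for Python or CLI calls. Termination sits in the finally block so that a failing test run does not leave a cluster running.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def ephemeral_cluster(create: Callable[[], str], terminate: Callable[[str], None]) -> Iterator[str]:
    """Create a test cluster, yield its ID, and always terminate it afterwards."""
    cluster_id = create()
    try:
        yield cluster_id
    finally:
        # Runs even when the tests raise, so a red build does not leave a cluster running.
        terminate(cluster_id)


if __name__ == "__main__":
    log = []
    with ephemeral_cluster(lambda: "test-cluster-1",
                           lambda cid: log.append(f"terminated {cid}")) as cid:
        log.append(f"ran tests on {cid}")
    print(log)
```

Setting autotermination_minutes on the test cluster is a useful backstop in case the CI agent itself dies before the finally block runs.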

Conclusion: Accelerating your data engineering journey

Adopting CI/CD for Databricks Workflows, powered by DAB templates and integrated with Azure DevOps or GitHub Actions, is no longer a luxury; it is a necessity for modern data engineering teams. It changes how data pipelines are built, tested and deployed, bringing software engineering best practices into the data world.

By automating deployments, ensuring consistency and building in solid testing, you not only reduce operational overhead and risk but also significantly speed up the delivery of valuable data products across the organization. Adopt these tools and your data engineering work will become more agile, reliable and impactful.