In the early days of Big Data, the path was clear: you wrote a script, scheduled it with cron, and hoped for the best. If you needed complex dependencies, you orchestrated it externally with Oozie, Airflow, or Azure Data Factory.

Today, the landscape has shifted. With the maturity of Databricks Workflows and the rise of Delta Live Tables (DLT), Data Engineers are faced with a fundamental choice in architectural paradigms: Imperative vs. Declarative.

As we build scalable pipelines using Azure and Databricks, the question isn't just "which one works?" but "which one fits the engineering culture, maintenance lifecycle, and budget of the project?"

In this article, we’ll explore the theoretical underpinnings, dive into the operational differences, and provide a clear decision framework for implementing your next data architecture.

The Theoretical Foundation: The DAG and The State

To understand the difference between Workflows and DLT, we have to look at Graph Theory and State Management.

Every data pipeline is essentially a Directed Acyclic Graph (DAG). Data moves from node A (Bronze) to node B (Silver), but never circles back.

1. The Imperative Model (Databricks Workflows)

In an imperative model, you are the graph builder. You explicitly define the edges of the graph.

- Theory: You define the Control Flow. You tell the system: "Execute Task A. If successful, execute Task B." This gives you maximum control but demands careful, manual dependency mapping.

- State Management: You are responsible for the state. If the job fails halfway, you must make sure that re-running it is idempotent, meaning a rerun leaves the data in the same state as a single successful run. This often means explicitly configuring checkpoint locations for streaming queries and choosing write patterns (e.g., MERGE instead of a blind append) so that retries don't duplicate or corrupt data.
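To make the imperative model concrete, here is a minimal sketch in plain Python. It assumes an in-memory dict stands in for a Delta table, and the functions extract_bronze, upsert_silver, and run_pipeline are hypothetical. The developer hard-codes the edge (A before B), and idempotency comes from a keyed upsert rather than an append, so a retry does not duplicate rows.

```python
# Hypothetical in-memory "Silver" table keyed by order_id, standing in for a Delta table.
silver: dict[int, dict] = {}


def extract_bronze() -> list[dict]:
    # Stand-in for reading raw files; note that order 1 arrives twice.
    return [
        {"order_id": 1, "amount": 10.0},
        {"order_id": 2, "amount": 25.5},
        {"order_id": 1, "amount": 10.0},
    ]


def upsert_silver(rows: list[dict]) -> None:
    # Keyed upsert (MERGE-like): re-running converges to the same final state,
    # whereas a blind append would add duplicates on every retry.
    for row in rows:
        silver[row["order_id"]] = row


def run_pipeline() -> None:
    # The imperative part: we decide the edges and the order ourselves.
    rows = extract_bronze()  # Task A
    if not rows:             # explicit control flow
        return
    upsert_silver(rows)      # Task B runs only after Task A succeeded


run_pipeline()
run_pipeline()  # simulated retry after a failure
print(sorted(silver))  # [1, 2]
```

On Databricks, the upsert step would typically be a Delta MERGE INTO keyed on the business key; the point is that you, not the engine, own this decision.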

2. The Declarative Model (Delta Live Tables)

In a declarative model, you define the nodes; the system builds the graph.

- Theory: You define the Data Flow. You tell the system: "Table B is defined as a transformation of Table A." You do not tell it when or how to run the intermediate steps.

- Topological Sort: The DLT engine reads all your table definitions, infers the dependencies between them, and performs a "topological sort" to derive a valid execution order, one in which every table runs after the tables it reads from. Independent branches of the graph can run in parallel, and task dependencies are handled automatically.

- State Abstraction: The system abstracts the state. It handles the checkpoints, the schema evolution, and the "exactly-once" processing guarantees of Spark Structured Streaming automatically, drastically reducing the boilerplate code.
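The declarative idea can be illustrated with a toy engine. This is a sketch under stated assumptions, not DLT's implementation: the @table decorator and the TABLES registry are hypothetical, and inputs are declared explicitly in the decorator (in DLT they are inferred from calls such as dlt.read("silver_orders")). Tables are registered out of order, and Python's standard graphlib.TopologicalSorter derives the execution order from the declared dependencies.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical registry standing in for a declarative engine:
# table name -> (names of the tables it reads, transformation function).
TABLES: dict[str, tuple[list[str], Callable[..., list]]] = {}


def table(name: str, inputs: list[str]):
    """Declare a table. Registering it runs nothing; the engine decides when."""
    def register(fn: Callable[..., list]) -> Callable[..., list]:
        TABLES[name] = (inputs, fn)
        return fn
    return register


# Declared deliberately out of order: Gold first, Bronze last.
@table("gold_revenue", inputs=["silver_orders"])
def gold_revenue(rows: list) -> list:
    return [sum(r["amount"] for r in rows)]


@table("silver_orders", inputs=["bronze_orders"])
def silver_orders(rows: list) -> list:
    return [r for r in rows if r["amount"] is not None]


@table("bronze_orders", inputs=[])
def bronze_orders() -> list:
    return [{"amount": 10.0}, {"amount": None}, {"amount": 5.0}]


def run_all() -> tuple[dict[str, list], list[str]]:
    # Build node -> predecessors from the declarations, then sort topologically.
    # TopologicalSorter raises graphlib.CycleError if the graph has a cycle.
    graph = {name: set(inputs) for name, (inputs, _) in TABLES.items()}
    order = list(TopologicalSorter(graph).static_order())
    results: dict[str, list] = {}
    for name in order:
        inputs, fn = TABLES[name]
        results[name] = fn(*(results[i] for i in inputs))
    return results, order


results, order = run_all()
print(order)                    # ['bronze_orders', 'silver_orders', 'gold_revenue']
print(results["gold_revenue"])  # [15.0]
```

A real engine would use TopologicalSorter's get_ready()/done() interface instead of static_order() to dispatch independent branches in parallel.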

DLT in Depth: The Declarative Paradigm & Architecture Placement

DLT excels when implementing the Medallion Architecture (Bronze, Silver, Gold). It is designed to define the transformations between these quality layers.

🥉 Bronze Layer: Simplified Ingestion with Auto Loader

DLT works seamlessly with Auto Loader for reliable, incremental ingestion from Azure Data Lake Storage (ADLS) or other cloud storage.

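- Architectural Benefit: You declare a table whose body reads with Auto Loader, i.e. spark.readStream.format("cloudFiles") inside a @dlt.table function (dlt.read_stream, by contrast, reads another dataset defined in the same pipeline). DLT manages the streaming checkpoint that tracks which files have already been processed, giving you exactly-once ingestion without configuring checkpoint locations or other streaming boilerplate yourself.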
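What "file tracking" means can be shown with a toy model of incremental discovery. This sketch is hypothetical: a JSON file of already-seen names stands in for the checkpoint, and Auto Loader's real checkpoint format and discovery modes are different and managed for you. The comment inside the function points out why the toy is only at-least-once.

```python
import json
import os
import tempfile


def ingest_new_files(landing_dir: str, checkpoint_path: str) -> list[str]:
    """Return files in landing_dir not yet recorded in the checkpoint, then record them."""
    seen: set[str] = set()
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            seen = set(json.load(f))
    new_files = sorted(set(os.listdir(landing_dir)) - seen)
    # A real pipeline would load new_files into Bronze here. Writing the data and
    # updating the checkpoint as two independent steps is only at-least-once: a crash
    # between them replays the batch. Exactly-once needs the sink write and the
    # progress record to be coordinated, which Structured Streaming with a Delta sink does.
    with open(checkpoint_path, "w") as f:
        json.dump(sorted(seen | set(new_files)), f)
    return new_files


with tempfile.TemporaryDirectory() as tmp:
    landing = os.path.join(tmp, "landing")
    os.mkdir(landing)
    checkpoint = os.path.join(tmp, "checkpoint.json")
    for name in ("a.json", "b.json"):
        open(os.path.join(landing, name), "w").close()
    first = ingest_new_files(landing, checkpoint)   # ['a.json', 'b.json']
    open(os.path.join(landing, "c.json"), "w").close()
    second = ingest_new_files(landing, checkpoint)  # ['c.json']

print(first, second)
```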

🥈 Silver Layer: Data Quality as Code

This is DLT's most valuable contribution. It shifts data quality testing from a post-processing step to an inline validation step.

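- Expectations Theory: You define row-level constraints (e.g., non-null keys, valid value ranges) with Python decorators such as @dlt.expect, @dlt.expect_or_drop, and @dlt.expect_or_fail, or with CONSTRAINT ... EXPECT clauses in SQL. This enforces a Data Contract on the Silver layer. When a row violates the contract, DLT can keep it and record the violation (expect), drop it from the target table (expect_or_drop), or stop the update for critical errors (expect_or_fail). Violation counts show up in the pipeline's data quality metrics; if you want to keep bad rows for inspection, you route them to a separate quarantine table yourself.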

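- Schema Evolution: DLT is opinionated about schema handling. Combined with Auto Loader's schema inference, it can detect new columns in incoming data and evolve the target table's schema, taking on much of the manual work that schema changes usually cause in streaming pipelines.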
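The three violation policies are easier to compare side by side. Below is a toy model in plain Python, not the DLT API: apply_expectations and ExpectationFailed are hypothetical names, and Python lambdas stand in for the SQL boolean expressions that DLT's @dlt.expect, @dlt.expect_or_drop, and @dlt.expect_or_fail actually take.

```python
from typing import Callable, Optional

Row = dict
Rule = Callable[[Row], bool]


class ExpectationFailed(Exception):
    """Raised when a fail-policy rule is violated (stops the 'update')."""


def apply_expectations(
    rows: list[Row],
    warn: Optional[dict[str, Rule]] = None,
    drop: Optional[dict[str, Rule]] = None,
    fail: Optional[dict[str, Rule]] = None,
) -> tuple[list[Row], dict[str, int]]:
    """Toy model of three expectation policies; returns (kept rows, violation counts).

    warn -> keep the row, count the violation   (compare @dlt.expect)
    drop -> remove the row, count the violation (compare @dlt.expect_or_drop)
    fail -> raise on the first violation        (compare @dlt.expect_or_fail)
    """
    warn, drop, fail = warn or {}, drop or {}, fail or {}
    metrics = {name: 0 for name in [*warn, *drop, *fail]}
    kept: list[Row] = []
    for row in rows:
        for name, rule in fail.items():
            if not rule(row):
                raise ExpectationFailed(f"{name} violated by {row}")
        for name, rule in warn.items():
            if not rule(row):
                metrics[name] += 1
        violated = [name for name, rule in drop.items() if not rule(row)]
        for name in violated:
            metrics[name] += 1
        if not violated:
            kept.append(row)
    return kept, metrics


rows = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2.0},
]
kept, metrics = apply_expectations(
    rows,
    warn={"positive_amount": lambda r: r["amount"] > 0},
    drop={"valid_id": lambda r: r["order_id"] is not None},
)
print([r["order_id"] for r in kept])  # [1, 3]
print(metrics)  # {'positive_amount': 1, 'valid_id': 1}
```

Passing the same valid_id rule under fail instead would raise ExpectationFailed on the second row, which corresponds to stopping the pipeline for a critical contract breach.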

FinOps and Cluster Abstraction

DLT uses Enhanced Autoscaling by default for new pipelines. Instead of relying on the traditional Spark cluster autoscaler (which can be slow to react), Enhanced Autoscaling sizes the cluster based on task-slot utilization and task queue depth and, for streaming workloads, on how much data is waiting to be processed.

- Cost Abstraction: While you lose fine-grained control over the cluster type for individual tables, DLT's automatic scaling is often more efficient for variable workloads: it shuts down underutilized resources quickly, which can lower operational costs over time.

Workflows in Depth: The Imperative Approach & Control Flow

Workflows are your foundation for platform engineering and control flow. They offer the most flexibility for work that goes beyond pure data transformation.

Interoperability and Control Flow

Workflows shine when the pipeline execution is not purely data-to-data:

- Pre-Processing Checks: Running a pre-flight check in Python (e.g., validating permissions in Azure Key Vault or checking file existence via an Azure Function).

- External API Calls: Making a REST API call to trigger a machine learning model, notify a downstream system, or fetch configuration dynamically.

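- Loops and Conditionals: Workflows let you define conditional logic (e.g., run Task C only if Task B fails, using the task's "Run if" dependency setting, or branch on a job parameter with an If/else condition task) and iterate over inputs with a For each task.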
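The "only run Task C if Task B fails" case boils down to a rule evaluated over the upstream task states. This plain-Python sketch models a subset of the "Run if" conditions (the enum values mirror the run_if field values in the Jobs API); should_run and the task names are hypothetical, and the If/else condition task and For each task are not modeled.

```python
from enum import Enum


class RunIf(Enum):
    # A subset of the "Run if" conditions; names mirror the Jobs API values.
    ALL_SUCCESS = "ALL_SUCCESS"
    AT_LEAST_ONE_FAILED = "AT_LEAST_ONE_FAILED"
    ALL_DONE = "ALL_DONE"


def should_run(condition: RunIf, upstream: list[str]) -> bool:
    """Decide whether a task runs, given its upstream results ('SUCCESS' or 'FAILED')."""
    if condition is RunIf.ALL_SUCCESS:
        return all(state == "SUCCESS" for state in upstream)
    if condition is RunIf.AT_LEAST_ONE_FAILED:
        return any(state == "FAILED" for state in upstream)
    return True  # ALL_DONE: run once upstream tasks finish, whatever the outcome


# Task B failed; decide what happens to two tasks that depend on it.
upstream = ["FAILED"]
plan = {
    "notify_on_failure": should_run(RunIf.AT_LEAST_ONE_FAILED, upstream),
    "publish_gold": should_run(RunIf.ALL_SUCCESS, upstream),
}
print(plan)  # {'notify_on_failure': True, 'publish_gold': False}
```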

Maximum Customization and FinOps

For environments with strict budget constraints, Workflows provide the necessary levers for FinOps (Financial Operations):

- Node-Level Optimization: You can give each task its own compute. A lightweight SQL query can run on a small serverless SQL warehouse, while a complex PySpark ETL step runs on a larger job cluster sized for that workload.

- Spot Instance Strategy: You can precisely configure Spot Instances (Azure Spot VMs) per job cluster for non-critical, fault-tolerant tasks to maximize cost savings, a level of per-task control that DLT's pipeline-level cluster settings do not offer.
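Per-task compute and a spot strategy typically appear in the job definition itself. Below is a sketch of a Jobs API-style payload built as a Python dict. It assumes an Azure workspace. Every ID, path, node type, and runtime version is a hypothetical placeholder, and the field names should be checked against the Jobs API reference for your workspace version.

```python
import json

# Hypothetical Jobs API-style payload; all IDs, paths, node types and the runtime
# version are placeholders to replace with values from your own workspace.
job_spec = {
    "name": "orders_nightly",
    "job_clusters": [
        {
            "job_cluster_key": "etl_spot",
            "new_cluster": {
                "spark_version": "<databricks-runtime-version>",
                "node_type_id": "<node-type-id>",
                "num_workers": 4,
                "azure_attributes": {
                    # Keep the first node (the driver) on-demand, place workers on
                    # spot VMs, and fall back to on-demand if spot capacity is unavailable.
                    "first_on_demand": 1,
                    "availability": "SPOT_WITH_FALLBACK_AZURE",
                    "spot_bid_max_price": -1,  # pay up to the on-demand price
                },
            },
        }
    ],
    "tasks": [
        {
            # Lightweight SQL runs on a small SQL warehouse, no Spark cluster needed.
            "task_key": "daily_kpis",
            "sql_task": {
                "warehouse_id": "<small-serverless-warehouse-id>",
                "query": {"query_id": "<query-id>"},
            },
        },
        {
            # Heavy PySpark ETL runs on the spot-backed job cluster defined above.
            "task_key": "heavy_etl",
            "job_cluster_key": "etl_spot",
            "notebook_task": {"notebook_path": "/Workspace/<path>/heavy_etl"},
        },
    ],
}

print(json.dumps(job_spec, indent=2))
```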

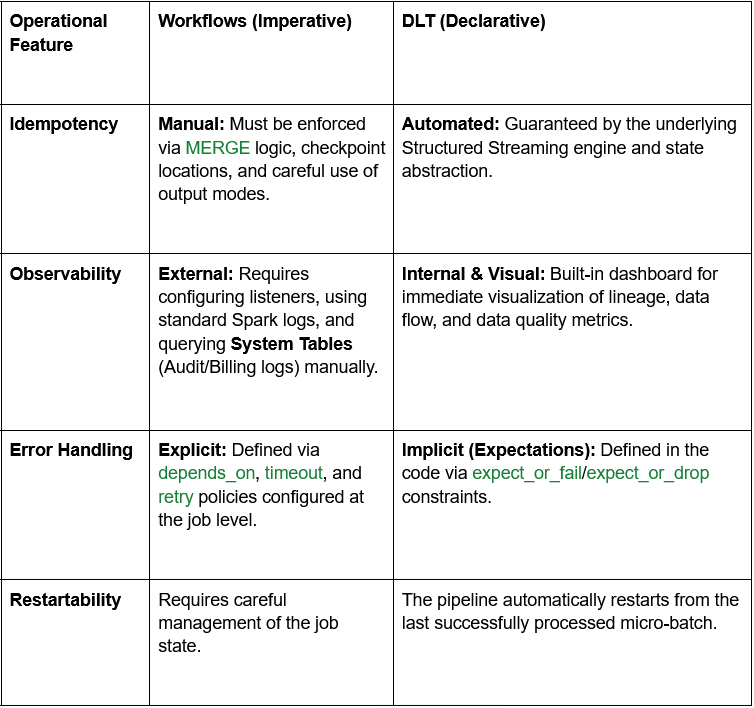

The Operational View: Monitoring and Reliability

Regardless of the choice, reliability is key. Here is how they differ in operation:

The Hybrid Solution: The Workflow Container

In most sophisticated enterprise environments, the answer is not one or the other but a strategic combination: the Workflow Container Pattern.

- Workflows act as the Controller (C): they handle scheduling, parameters, and error notifications, and orchestrate the end-to-end run of the data product.

- DLT acts as the Engine (E): it runs as a pipeline task inside the Workflow and executes the core data transformations, enforcing data quality expectations and handling the complexities of streaming state.

This pattern uses the DLT engine's automation for the difficult data processing while retaining the imperative control needed to interact with the broader Azure ecosystem. For example, an Azure DevOps pipeline can deploy the DLT definition with Databricks Asset Bundles (DABs), and a Workflow task then schedules the resulting DLT pipeline.
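Here is a sketch of the Controller/Engine split as a Jobs API-style definition, written as a Python dict. It is hypothetical throughout: the task names, notebook paths, pipeline ID, and email address are placeholders, and compute settings are omitted for brevity. In an asset bundle, the same structure would typically be written in YAML under resources: jobs:, with the DLT pipeline itself defined under resources: pipelines:. The last lines use graphlib to confirm that the declared dependencies form a DAG.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical Workflow definition (Jobs API-style); IDs, paths and the email
# address are placeholders, and compute settings are omitted for brevity.
workflow = {
    "name": "orders_data_product",
    "schedule": {"quartz_cron_expression": "0 0 2 * * ?", "timezone_id": "UTC"},  # 02:00 daily
    "email_notifications": {"on_failure": ["data-team@example.com"]},
    "tasks": [
        {
            # Controller: imperative pre-flight check (e.g., source files present).
            "task_key": "preflight_checks",
            "notebook_task": {"notebook_path": "/Workspace/<path>/preflight_checks"},
        },
        {
            # Engine: the whole DLT pipeline runs as a single task in the Workflow.
            "task_key": "run_dlt_pipeline",
            "depends_on": [{"task_key": "preflight_checks"}],
            "pipeline_task": {"pipeline_id": "<dlt-pipeline-id>", "full_refresh": False},
        },
        {
            # Controller: tell downstream systems the data product is fresh.
            "task_key": "notify_downstream",
            "depends_on": [{"task_key": "run_dlt_pipeline"}],
            "run_if": "ALL_SUCCESS",
            "notebook_task": {"notebook_path": "/Workspace/<path>/notify_downstream"},
        },
    ],
}

# Sanity check: the task dependencies form a DAG; print one valid execution order.
graph = {
    t["task_key"]: {d["task_key"] for d in t.get("depends_on", [])}
    for t in workflow["tasks"]
}
print(list(TopologicalSorter(graph).static_order()))
# ['preflight_checks', 'run_dlt_pipeline', 'notify_downstream']
print(json.dumps(workflow, indent=2))
```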

Decision Framework: When to choose which?